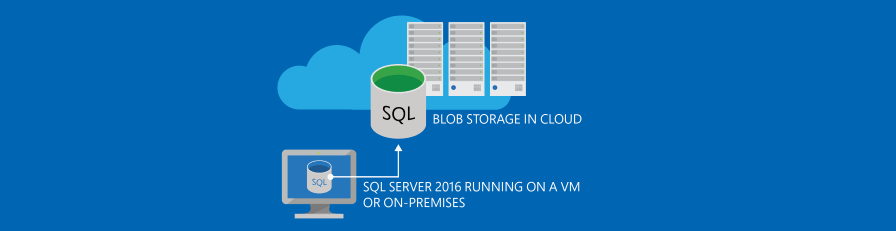

It is a good practice to keep multiple copies of our most precious data. By using on-premises SQL Server databases for AX 2012 or Dynamics 365 Finance and Operations, archiving SQL database backups to offsite-locations are a must. I have built automation for archiving SQL database backups using Azure Blob Storage.

Overview of the processes

Maintenance regime

Our maintenance regime looks like the following:

- 1x Weekly Full backup

- 6x Daily Differential backup

- 15 minute Transactional log backups

They are captured locally on the primary SQL instance, to keep the timestamps for last successful backups in our AlwaysOn cluster. Then we move the files to a shared network storage, which is visible to both High Availability sites, in case there is an outage and we need to a fail over and restore data.

In case of a natural disaster due to the close geographical proximity of the sites we needed an additional layer of safety.

Archiving offsite

Every night we are running a PowerShell script that uses the AzCopy utility. It is uploading our backup files on a Microsoft Azure cloud storage account.

You are paying for the network usage (IO) and the size occupied on the disks, so it is a good idea to have some sort of housekeeping. Our solution was to use an Azure RunBook to determine what to keep and what to delete. The current setup is to have 1 full backup file for a year available every quarter (4x 400 GB), and keep all full / differential / transactional files for the last month (4x 400 GB + 26x 10 GB).

This puts the total size occupied around 4 TB, and costs about 35 GBP a month using a cold storage. This price could go up if you also want to utilize a hot tier storage for the latest backup set. That is useful if you want to come back from the cloud on a short notice.

Detailed steps

Taking a backup

We are using the Maintenance script package from Ola Hallengren, and our command goes like the following. The script is stored in our Administration database, backup goes to a local folder and being split between 8 files, with an increased buffer count. It normally takes about 1 hour to complete for 2 TB.

sqlcmd -E -S $(ESCAPE_SQUOTE(SRVR)) -d Administration -Q "EXECUTE [dbo].[DatabaseBackup] @Databases = 'USER_DATABASES', @Directory = N'U:\Backup', @BackupType = 'FULL', @CopyOnly = 'N', @Verify = 'N', @CleanupTime = 336, @Compress = 'Y', @CheckSum = 'Y', @BufferCount = 2200, @MaxTransferSize = 4194304, @NumberOfFiles = 8, @LogToTable = 'Y'" -b

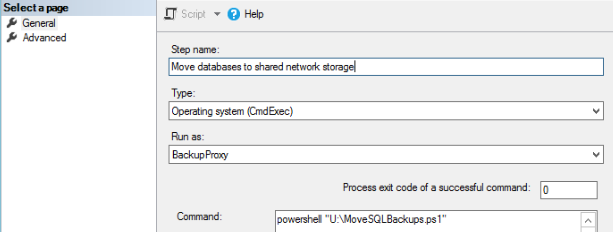

Then the second step is to copy off the databases to the shared network storage, using a PowerShell script:

Preparing Azure

First of all you need to sign on to https://portal.azure.com/ and create a new Azure Storage Account. We have created a StorageV2 General purpose storage, with a Locally-redundant storage. Then within Containers we have added a daxsql-backup folder.

You need to generate a new Destination key under Azure Storage Account > Access Keys and also take note of the URL.

Using AzCopy to transfer files

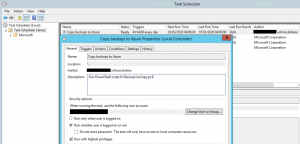

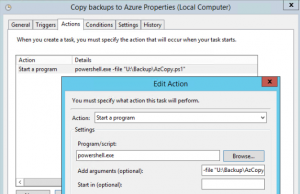

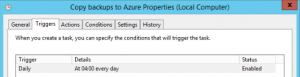

I have set up a Windows Task Scheduler entry on our Backup environment, which is responsible for copying the files to the Azure Blob Storage container. It is running a PowerShell command daily, timing-wise you should run it once the SQL backups have been taken and copied to the share.

The AzCopy.ps1 and the access parameters for BAK and TRN files could be found on my GitHub, see link at the bottom.

Please note that I have placed the tool in the default path under C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy\.

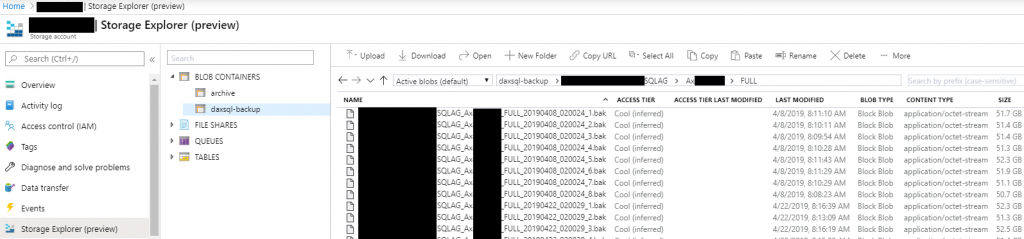

Validating the results of archiving SQL database backups

If you have set up everything correctly, then the files will land in your backup container. If something goes wrong, have a look at the AzCopy tool and the PowerShell log files.

Housekeeping

You should regularly clean up the Blob storage container, to reduce disk costs. We did this by creating an Azure RunBook, that is keeping or removing old files based on the following policies:

- Keep everything for the last 31 days

- Keep FULL backups from the 1st week of every quarter for 1 year

- Files must meet a specific file format, for us it is:

EN*_DatabaseName_BackupType_YYYYMMDD_HHMMSS_FileCount.BAK and .TRN

The RunBook is set to execute once per week.

The source code for all the files above are available on GitHub.

Leave A Comment