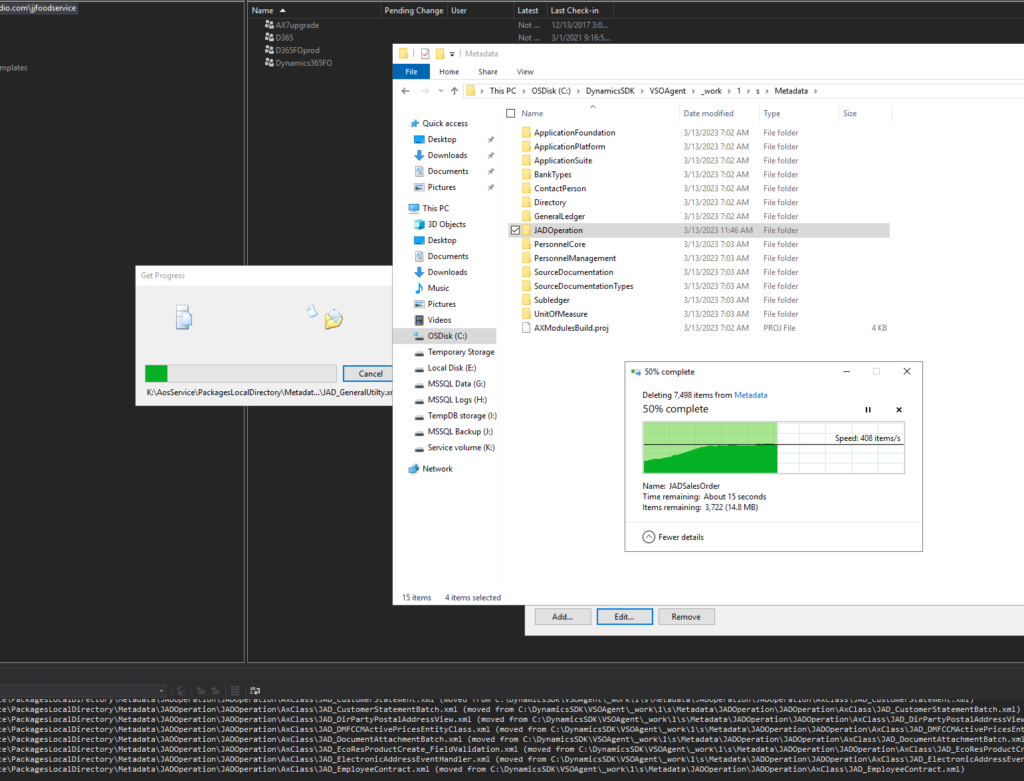

Data Entity refresh performance improvements

If we refresh the data entities due to changes to our metadata, it has been taking a while before. Microsoft tried to address this by moving the process out to run in a batch job, but there are things that we may still do to improve our DB performance and health. You can follow the tips below to carry out the Data Entity refresh performance improvements.

When checking the entity refresh on a local VHD, we have noticed in the query profiler that there is a potential covering index missing. We have extended the DMFTargetXML table:

- WIK_PerfIdx

- Partition

- Entity (included: yes)

- TargetField (included: yes)

- XMLField (included: yes)

- ArrayIndex (included: yes)

<?xml version="1.0" encoding="utf-8"?>

<AxTableExtension xmlns:i="http://www.w3.org/2001/XMLSchema-instance">

<Name>DMFTargetXML.WIKExtension</Name>

<FieldGroupExtensions />

<FieldGroups />

<FieldModifications />

<Fields />

<FullTextIndexes />

<Indexes>

<AxTableIndex>

<Name>WIK_PerfIdx</Name>

<AllowDuplicates>Yes</AllowDuplicates>

<Fields>

<AxTableIndexField>

<DataField>Partition</DataField>

</AxTableIndexField>

<AxTableIndexField>

<DataField>Entity</DataField>

<IncludedColumn>Yes</IncludedColumn>

</AxTableIndexField>

<AxTableIndexField>

<DataField>TargetField</DataField>

<IncludedColumn>Yes</IncludedColumn>

</AxTableIndexField>

<AxTableIndexField>

<DataField>XMLField</DataField>

<IncludedColumn>Yes</IncludedColumn>

</AxTableIndexField>

<AxTableIndexField>

<DataField>ArrayIndex</DataField>

<IncludedColumn>Yes</IncludedColumn>

</AxTableIndexField>

</Fields>

</AxTableIndex>

</Indexes>

<Mappings />

<PropertyModifications />

<RelationExtensions />

<RelationModifications />

<Relations />

</AxTableExtension>The other idea came from a blog article about SQL DMV views performing poorly when querying the DB metadata. The regular index/statistics update scripts do not touch system tables, but with the below snippet you may update statistics on those too, greatly improving performance.

--identify last stats update date

SELECT

QUOTENAME(OBJECT_SCHEMA_NAME(i.object_id)) + N'.' + QUOTENAME(OBJECT_NAME(i.object_id)) + N'.' + QUOTENAME(name) AS index_name

, STATS_DATE(i.object_id, i.index_id) AS stats_date

FROM sys.indexes AS I

JOIN sys.partitions AS p ON

p.object_id = i.object_id

AND p.index_id = i.index_id

WHERE

OBJECTPROPERTYEX(i.object_id, 'IsSystemTable') = 1

AND i.index_id > 0

AND p.rows > 0;

--use XML PATH for SQL 2016 and earlier

DECLARE @SQL nvarchar(MAX) =

(

SELECT

N'UPDATE STATISTICS '

+ QUOTENAME(OBJECT_SCHEMA_NAME(i.object_id))

+ N'.'

+ QUOTENAME(OBJECT_NAME(i.object_id))

+ N';'

FROM sys.indexes AS i

JOIN sys.partitions AS p ON

p.object_id = i.object_id

AND p.index_id = i.index_id

WHERE

OBJECTPROPERTYEX(i.object_id, 'IsSystemTable') = 1

AND i.index_id > 0

AND p.rows > 0

FOR XML PATH(''), TYPE).value('.','nvarchar(MAX)'

);

EXEC sp_executesql @SQL;

--use STRING_AGG() in SQL 2017 and later, including Azure SQL Database

DECLARE @SQL nvarchar(MAX) =

(

SELECT

STRING_AGG(

N'UPDATE STATISTICS '

+ QUOTENAME(OBJECT_SCHEMA_NAME(i.object_id))

+ N'.'

+ QUOTENAME(OBJECT_NAME(i.object_id))

+ N';'

,'')

FROM sys.indexes AS i

JOIN sys.partitions AS p ON

p.object_id = i.object_id

AND p.index_id = i.index_id

WHERE

OBJECTPROPERTYEX(i.object_id, 'IsSystemTable') = 1

AND i.index_id > 0

AND p.rows > 0

);

EXEC sp_executesql @SQL;Carrying out the above steps should help to get back to development and testing faster after a Data Entity refresh.