Out-of-control TempDB growth

Our SQL health report has occasionally shown out-of-control TempDB growth. It has happened, for example, when we triggered the bulk cleanup of invoiced sales orders or ran other large processes. You can find out more about monitoring TempDB growth for version store in these articles:

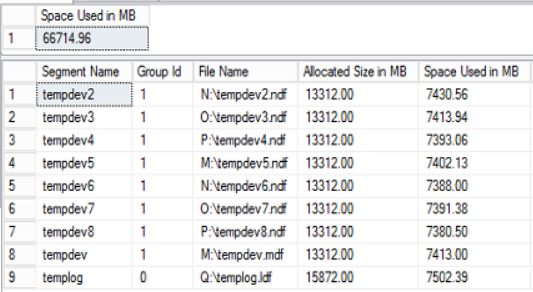

The following statements report the space currently occupied in your TempDB, both overall and per file. Our normal usage is around 2 GB, so seeing 67 GB in use looked very suspicious.

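    USE tempdb;  -- sysfiles and FILEPROPERTY report on the current database

    -- Total space used across all TempDB files.
    -- Sizes are counted in 8 KB pages, so dividing by 128.0 gives MB.
    SELECT SUM(CAST(FILEPROPERTY(name, 'SpaceUsed') / 128.0 AS DECIMAL(10,2))) AS [Space Used in MB]
    FROM sysfiles;

    -- Allocation and usage per file.
    -- A maxsize of -1 means unlimited growth, so the last two columns
    -- are only meaningful for files with a growth cap.
    SELECT RTRIM(name) AS [Segment Name],
           groupid AS [Group Id],
           filename AS [File Name],
           CAST(size / 128.0 AS DECIMAL(10,2)) AS [Allocated Size in MB],
           CAST(FILEPROPERTY(name, 'SpaceUsed') / 128.0 AS DECIMAL(10,2)) AS [Space Used in MB],
           CAST([maxsize] / 128.0 AS DECIMAL(10,2)) AS [Max in MB],
           CAST([maxsize] / 128.0 - (FILEPROPERTY(name, 'SpaceUsed') / 128.0) AS DECIMAL(10,2)) AS [Available Space in MB]
    FROM sysfiles
    ORDER BY groupid DESC;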

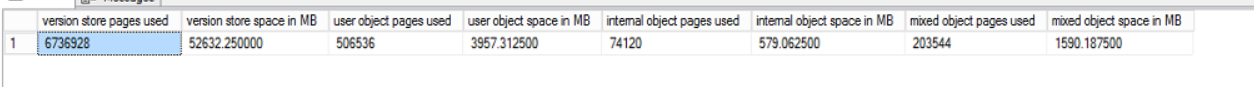

The next query shows the composition of the data within the TempDB files. It was immediately apparent that the space was being taken up not by user or system data but by the so-called version store. Normally an internal process cleans the version store up regularly, unless a dead thread, blocking, or something else broken within SQL Server prevents it:

    SELECT
        --SUM(allocated_extent_page_count) AS [allocated extent pages used],
        --(SUM(allocated_extent_page_count)*1.0/128) AS [allocated extent space in MB],
        SUM(version_store_reserved_page_count) AS [version store pages used],
        (SUM(version_store_reserved_page_count)*1.0/128) AS [version store space in MB],
        SUM(user_object_reserved_page_count) AS [user object pages used],
        (SUM(user_object_reserved_page_count)*1.0/128) AS [user object space in MB],
        SUM(internal_object_reserved_page_count) AS [internal object pages used],
        (SUM(internal_object_reserved_page_count)*1.0/128) AS [internal object space in MB],
        SUM(mixed_extent_page_count) AS [mixed object pages used],
        (SUM(mixed_extent_page_count)*1.0/128) AS [mixed object space in MB]
    FROM sys.dm_db_file_space_usage;

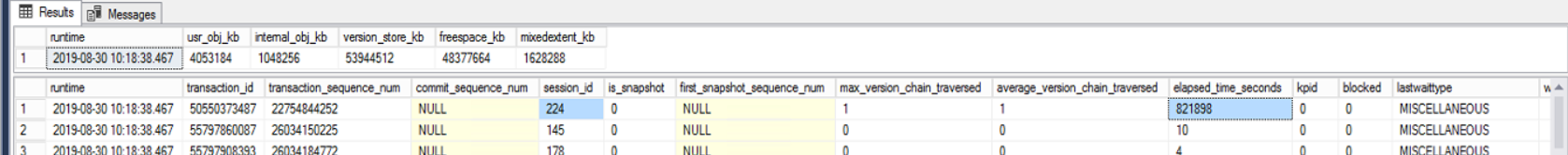
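
Once the version store stands out like this, it can help to see which database is producing the row versions. The query below is only a minimal sketch and was not part of our original investigation. It assumes SQL Server 2016 SP2 or later, where the sys.dm_tran_version_store_space_usage view is available; on older versions it will not run.

```sql
-- Version store space held in TempDB, broken down by source database.
-- Assumption: sys.dm_tran_version_store_space_usage exists on this instance
-- (SQL Server 2016 SP2 / 2017 and later).
SELECT DB_NAME(database_id)                              AS [Database],
       reserved_page_count                               AS [version store pages used],
       CAST(reserved_space_kb / 1024.0 AS DECIMAL(18,2)) AS [version store space in MB]
FROM sys.dm_tran_version_store_space_usage
ORDER BY reserved_space_kb DESC;
```

If one database accounts for nearly all of the reserved space, the sessions connected to that database are the first place to look.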

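The following query showed that session 224 had been open for several days in a sleeping state, which prevented the version store cleanup in TempDB from running. Row versions can only be removed once no active transaction still needs them, so a single long-lived transaction is enough to hold back the cleanup.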

    DECLARE @runtime datetime;
    SET @runtime = GETDATE();

    PRINT '-- sys.dm_db_file_space_usage';
    SELECT CONVERT(varchar(30), @runtime, 121) AS runtime,
           SUM(user_object_reserved_page_count) * 8 AS usr_obj_kb,
           SUM(internal_object_reserved_page_count) * 8 AS internal_obj_kb,
           SUM(version_store_reserved_page_count) * 8 AS version_store_kb,
           SUM(unallocated_extent_page_count) * 8 AS freespace_kb,
           SUM(mixed_extent_page_count) * 8 AS mixedextent_kb
    FROM sys.dm_db_file_space_usage;

    PRINT ' -- Output of active transactions which are using version store';
    SELECT CONVERT(varchar(30), @runtime, 121) AS runtime,
           a.*,
           b.kpid, b.blocked, b.lastwaittype, b.waitresource, b.dbid,
           b.cpu, b.physical_io, b.memusage, b.login_time, b.last_batch,
           b.open_tran, b.status, b.hostname, b.program_name, b.cmd,
           b.loginame, b.request_id
    FROM sys.dm_tran_active_snapshot_database_transactions a
    INNER JOIN sys.sysprocesses b ON a.session_id = b.spid;

    PRINT ' -- Input buffer of SPIDs identified above';
    SELECT CONVERT(varchar(30), @runtime, 121) AS runtime,
           b.spid,
           c.*
    FROM sys.dm_tran_active_snapshot_database_transactions a
    INNER JOIN sys.sysprocesses b ON a.session_id = b.spid
    CROSS APPLY sys.dm_exec_sql_text(b.sql_handle) c;

    PRINT ' -- Open cursors';
    -- Cursors that have been open for more than one hour, across all sessions
    SELECT *
    FROM sys.dm_exec_cursors(0) a
    CROSS APPLY sys.dm_exec_sql_text(a.sql_handle) t
    WHERE DATEDIFF(hh, a.creation_time, GETDATE()) > 1;

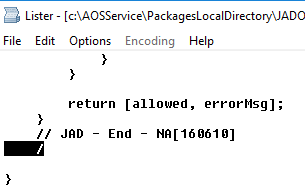
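
The script above returns a lot of columns. When the only question is which session has held a snapshot transaction open the longest, a narrower query can be easier to read. The following is a minimal sketch rather than the query we ran at the time. It joins to sys.dm_exec_sessions instead of sys.sysprocesses, and the TOP (10) limit and the [elapsed hours] column are arbitrary choices for illustration.

```sql
-- Oldest active snapshot transactions, with the state of the owning session.
SELECT TOP (10)
       t.session_id,
       t.transaction_id,
       t.elapsed_time_seconds,
       t.elapsed_time_seconds / 3600.0 AS [elapsed hours],
       s.status,                 -- 'sleeping' means no request is currently running
       s.host_name,
       s.program_name,
       s.login_name,
       s.last_request_end_time   -- when the session last finished a request
FROM sys.dm_tran_active_snapshot_database_transactions AS t
INNER JOIN sys.dm_exec_sessions AS s
        ON s.session_id = t.session_id
ORDER BY t.elapsed_time_seconds DESC;
```

A row with a sleeping status and an elapsed time measured in days is the pattern described here: the session is idle, but its transaction is still open and the version store cannot be trimmed past it.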

Session 224 came from one of our AX integration AOS instances. As a quick fix, restarting the AX AOS service released the API sessions.

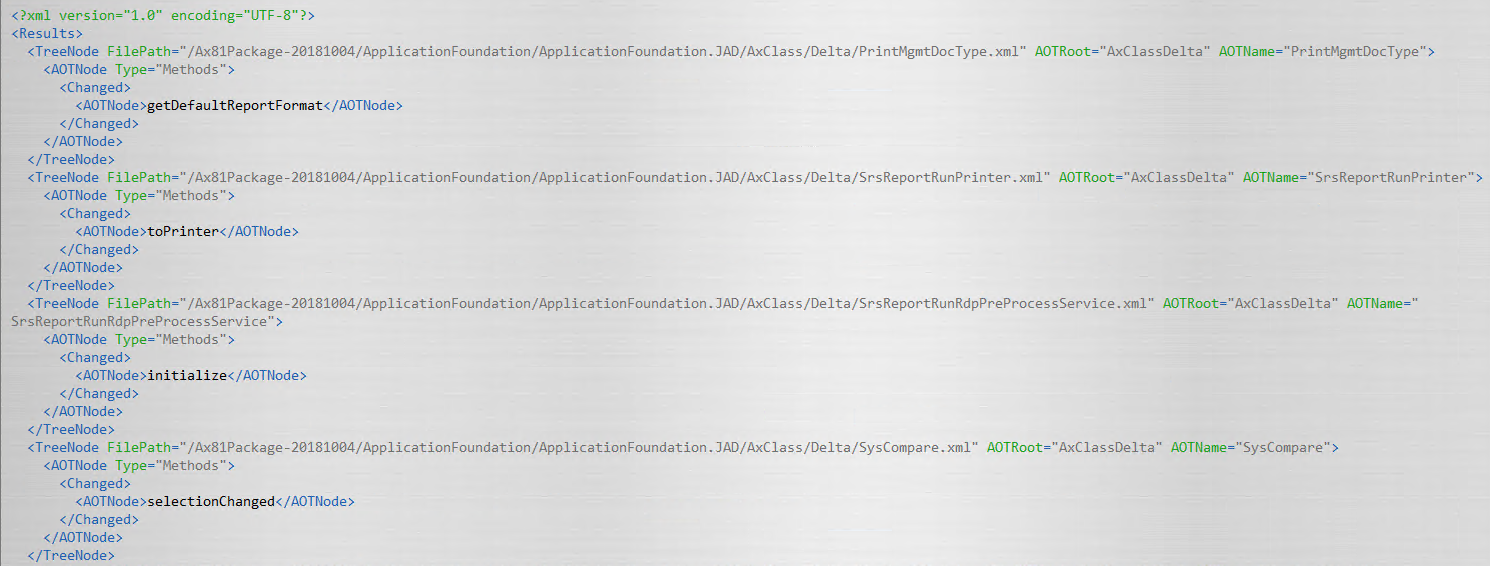

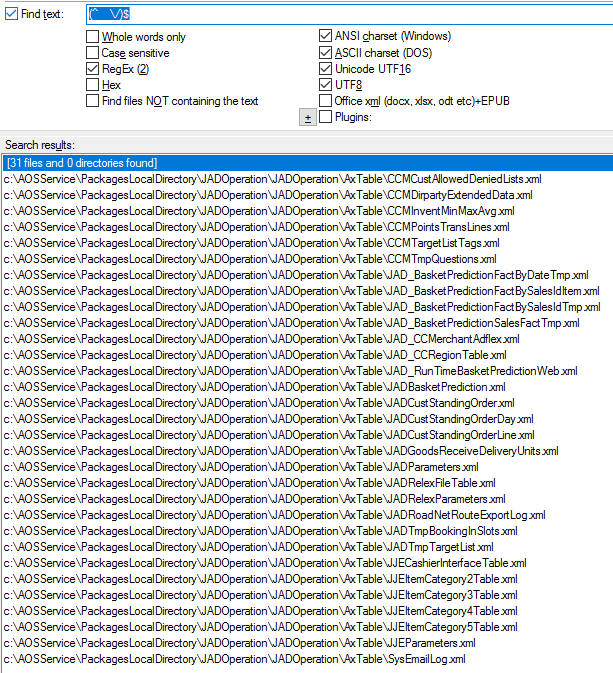

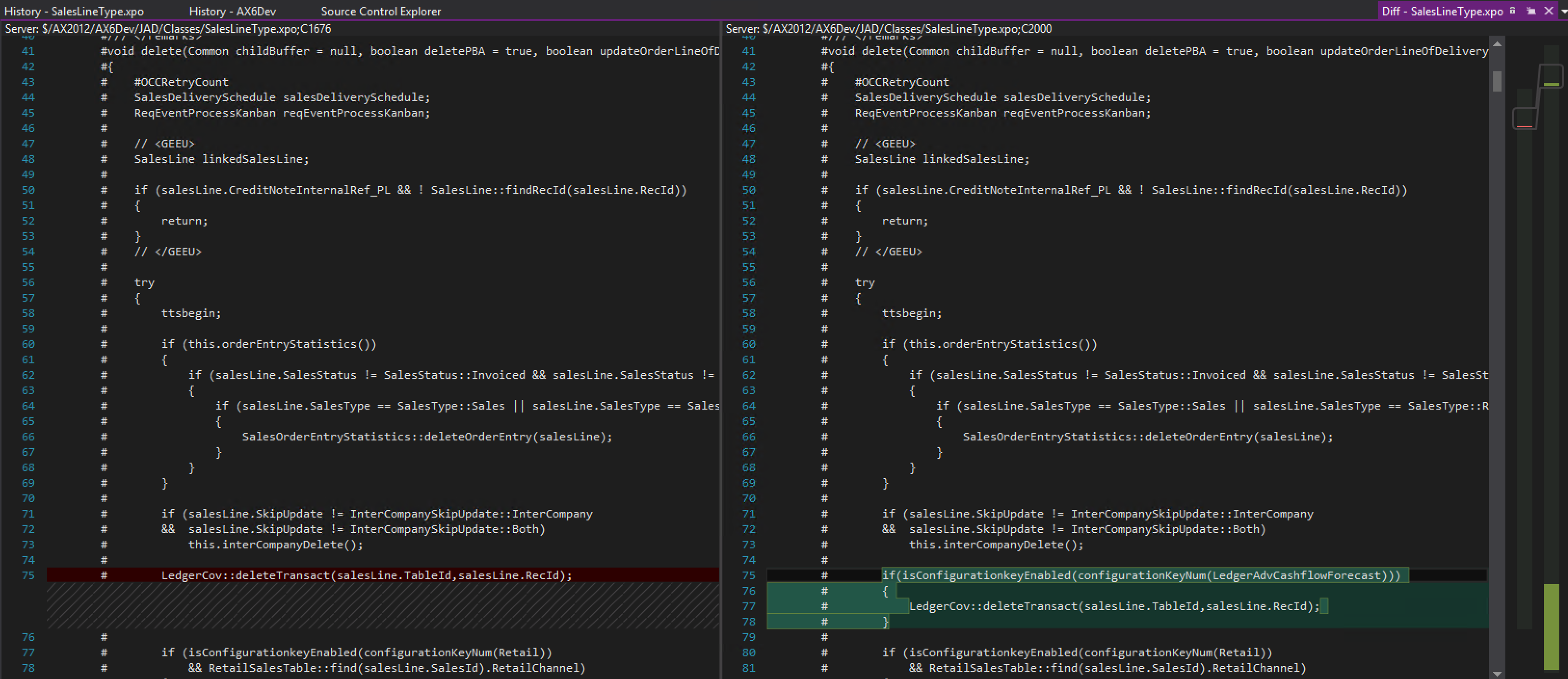

The root cause analysis revealed that referring to tables whose configuration keys are turned off can generate TempDB entries. In our case the sales order deletion batch job was running SalesLineType::delete, which calls LedgerCov::deleteTransact, a delete_from ledgerCov in a transaction block. We introduced a configuration key check for the table, and that eliminated the out-of-control TempDB growth.

We highly recommend taking a close look at your TempDB usage and identifying which tables with a disabled configuration key could have a big performance impact on your environment because code runs against them unnecessarily.