Dynamics Virtual Community Summit

This year the usual AXUG Summit Europe was delayed due to the Coronavirus pandemic and is taking place online this week as the Dynamics Virtual Community Summit. You can join many interesting sessions through the conference website.

I will be participating in 3 sessions:

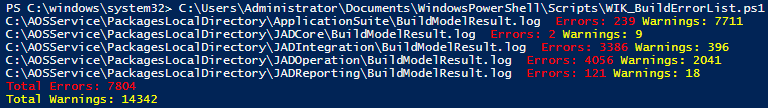

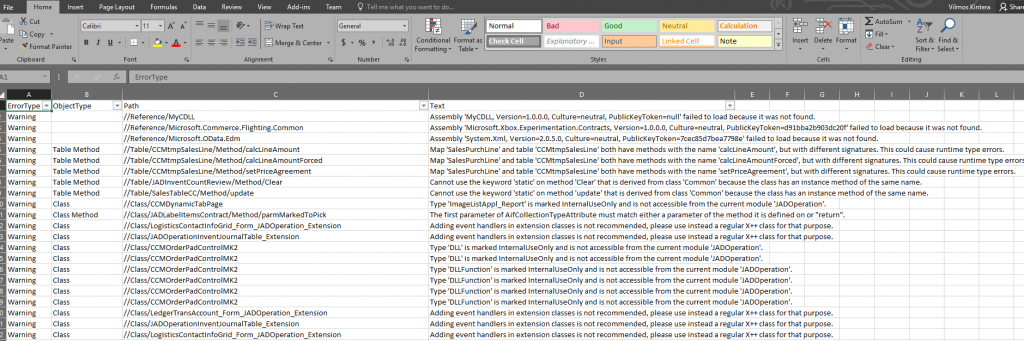

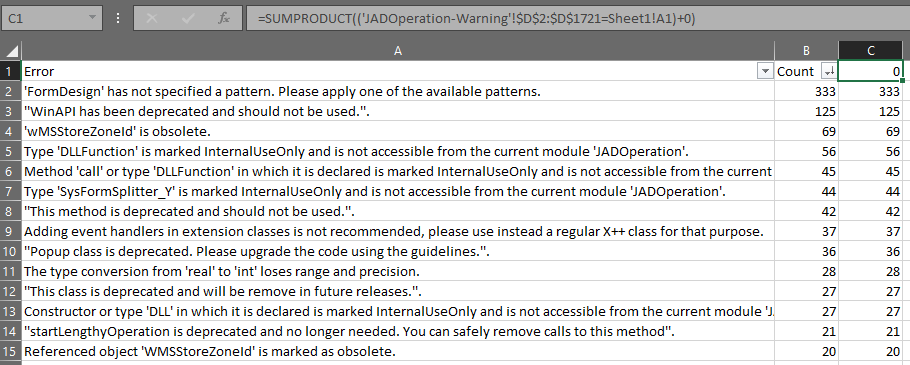

- Pushing the Limits of D365FO – An Enterprise-scale Data Upgrade

2020-07-01 11:00-12:00 CET

How do you decide between a green-field implementation and an upgrade? What gold are you leaving behind if you do not get your data in the cloud? JJ Food Service has been on a journey upgrading their AX 2012 R3 environment to Dynamics 365 for Finance and Operations, with 15 years of historical data and a 2 TB database, rolled forward since the beginning of time. Join our technical expert, Vilmos Kintera (Business Applications MVP), in this session to get first-hand experience of the challenges, tasks and logistics involved in becoming cloud-ready.

This is a slightly updated version of the webinar available on the Dynamics Zero-to-Hero YouTube channel.

- What is the Latest in D365FO Application Development?

2020-07-02 12:15-13:15 CET

Learn about the latest and greatest changes around Development and Application Life-cycle Management for Microsoft Dynamics 365 for Finance, Operations / Supply Chain Management. We will discuss recent and upcoming changes.

- Ask the Experts: A Roundtable for Finance and Operations Questions and Concerns

2020-07-03 15:30-16:00 CET

Join us for this roundtable session with finance and operations experts to discuss and answer questions that deal with your most pressing issues. Some topics for conversation may include local rollouts in various countries, data migration considerations, and more!